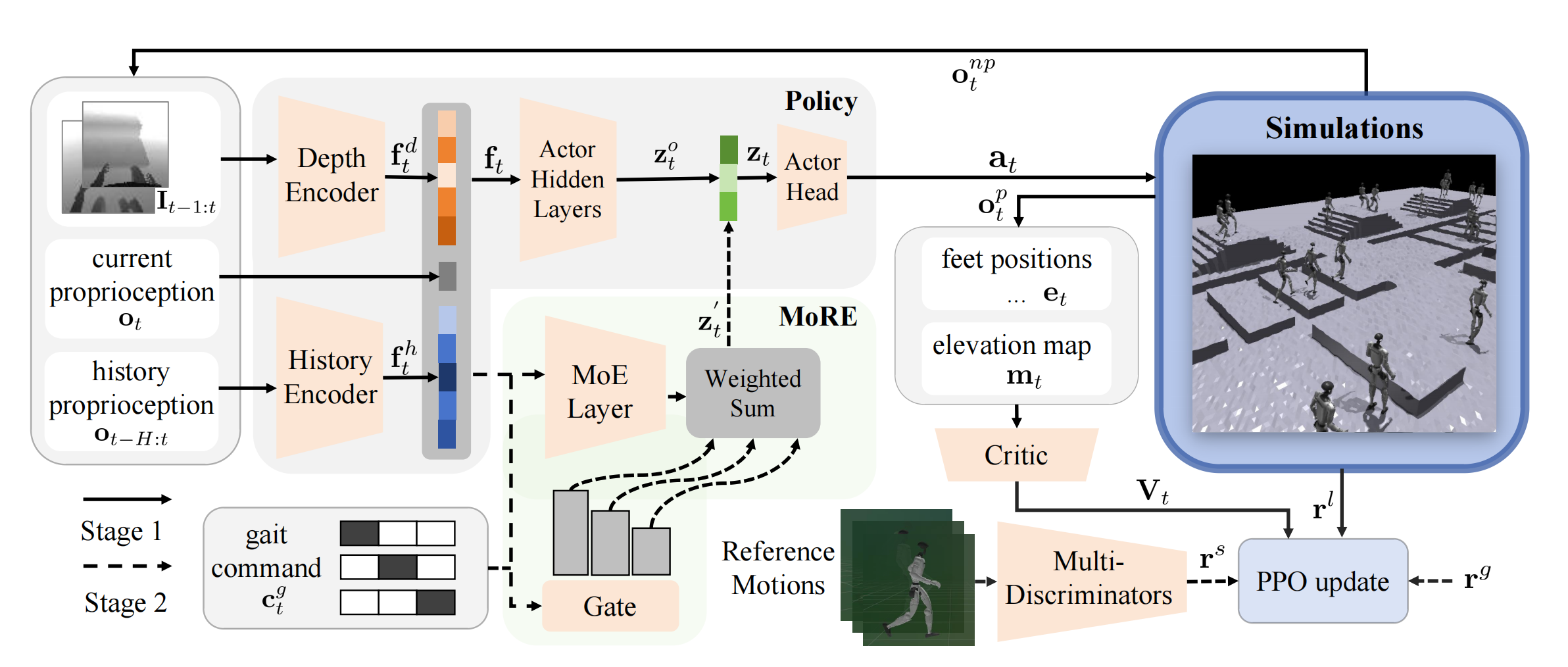

Humanoid robots have demonstrated robust locomotion capabilities using Reinforcement Learning (RL)-based approaches. Further, to obtain human-like behaviors, existing methods integrate human motion tracking or motion priors into the RL framework. However, these methods are limited to flat terrain and rely on proprioception only, which restricts their ability to traverse challenging terrains with human-like gaits. In this work, we propose a novel framework that uses a mixture of latent residual experts with multiple discriminators to train an RL policy capable of traversing complex terrains in controllable, lifelike gaits with exteroception. Our two-stage training pipeline first teaches the policy to traverse complex terrains using a depth camera, then enables gait-commanded switching between human-like locomotion patterns. We also design gait rewards to adjust human-like behaviors such as the robot's base height. Simulation and real-world experimental results demonstrate that our framework exhibits exceptional performance in traversing complex terrains and achieves seamless transitions between multiple human-like gait patterns.

@misc{wang2025moremixtureresidualexperts,
  title={MoRE: Mixture of Residual Experts for Humanoid Lifelike Gaits Learning on Complex Terrains},
  author={Dewei Wang and Xinmiao Wang and Xinzhe Liu and Jiyuan Shi and Yingnan Zhao and Chenjia Bai and Xuelong Li},
  year={2025},
  eprint={2506.08840},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  url={https://arxiv.org/abs/2506.08840},
}